Debugging Akka.NET

Note

This article is intended to provide advice to OSS contributors working on Akka.NET itself, not necessarily end-users of the software. End users might still find this advice helpful, however.

Racy Unit Tests

Akka.NET's test suite is quite large and periodically experiences intermittent "racy" failures as a result of various issues. This is a problem for the project as a whole because it causes us not to carefully investigate periodic and intermittent test failures as thoroughly as we should.

You can view the test flip rate report for Akka.NET on Azure DevOps here.

What are some common reasons that tests flip? How can we debug or fix them?

Expecting Messages in Fixed Orders

One common reason for tests to experience high flip rates is that they expect events to happen in a fixed order, whereas due to arbitrary scheduling that's not always the case.

For example:

    public void PoorOrderingSpec()
    {
        IActorRef CreateForwarder(IActorRef actorRef)
        {
            return Sys.ActorOf(act =>
            {
                act.ReceiveAny((o, _) =>
                {
                    actorRef.Forward(o);
                });
            });
        }

        // arrange
        IActorRef a1 = Sys.ActorOf(act =>
            act.Receive<string>((str, context) =>
            {
                context.Sender.Tell(str + "a1");
            }), "a1");

        IActorRef a2 = CreateForwarder(a1);
        IActorRef a3 = CreateForwarder(a1);

        // act
        a2.Tell("hit1");
        a3.Tell("hit2");

        // assert

        /*
         * RACY: no guarantee that a2 gets scheduled ahead of a3.
         * That depends entirely upon the ThreadPool and the dispatcher.
         */
        ExpectMsg("hit1a1");
        ExpectMsg("hit2a1");
    }

The fundamental mistake this spec author made was relying on a simple ordering assumption: that messages are processed in the order in which they're sent. This is true per actor, but not across all actors in a given process. Once we split the traffic between more than one actor's mailbox, all of our ordering assumptions go out the window.

How do we fix this? Two possible ways.

    public void FixedOrderingSpec()
    {
        IActorRef CreateForwarder(IActorRef actorRef)
        {
            return Sys.ActorOf(act =>
            {
                act.ReceiveAny((o, _) =>
                {
                    actorRef.Forward(o);
                });
            });
        }

        // arrange
        IActorRef a1 = Sys.ActorOf(act =>
            act.Receive<string>((str, context) =>
            {
                context.Sender.Tell(str + "a1");
            }), "a1");

        IActorRef a2 = CreateForwarder(a1);
        IActorRef a3 = CreateForwarder(a1);

        // act
        a2.Tell("hit1");
        a3.Tell("hit2");

        // assert

        // no raciness - ExpectMsgAllOf doesn't care about order
        ExpectMsgAllOf(new []{ "hit1a1", "hit2a1" });
    }

The simplest way in this case is to change the assertion to an ExpectMsgAllOf call, which expects an array of messages back but doesn't care about the order in which they arrive. This approach may not work in all cases, so the second approach we recommend for fixing these types of buggy tests will usually do the trick.

    public void SplitOrderingSpec()
    {
        IActorRef CreateForwarder(IActorRef actorRef)
        {
            return Sys.ActorOf(act =>
            {
                act.ReceiveAny((o, _) =>
                {
                    actorRef.Forward(o);
                });
            });
        }

        // arrange
        IActorRef a1 = Sys.ActorOf(act =>
            act.Receive<string>((str, context) =>
            {
                context.Sender.Tell(str + "a1");
            }), "a1");

        TestProbe p2 = CreateTestProbe();
        IActorRef a2 = CreateForwarder(a1);
        TestProbe p3 = CreateTestProbe();
        IActorRef a3 = CreateForwarder(a1);

        // act

        // manually set the sender - one to each TestProbe
        a2.Tell("hit1", p2);
        a3.Tell("hit2", p3);

        // assert

        // no raciness - both probes can process their own messages in parallel
        p2.ExpectMsg("hit1a1");
        p3.ExpectMsg("hit2a1");
    }

In this approach we split the assertions up across multiple TestProbe instances - that way we're not coupling each input activity to the same output mailbox. This is a more generalized approach for solving these ordering problems.

Not Accounting for System Message Processing Order

An important caveat when working with Akka.NET actors: system messages always get processed ahead of user-defined messages. Context.Watch and Context.Stop are examples of methods frequently called from user code which produce system messages.

Thus, we can get into trouble if we aren't careful about how we write our tests.

An example of a buggy test:

    public void PoorSystemMessagingOrderingSpec()
    {
        // arrange
        var myActor = Sys.ActorOf(act => act.ReceiveAny((o, context) =>
        {
            context.Sender.Tell(o);
        }), "echo");

        // act
        Watch(myActor); // deathwatch
        myActor.Tell("hit");
        Sys.Stop(myActor);

        // assert
        ExpectMsg("hit");
        ExpectTerminated(myActor); // RACY
        /*
         * Sys.Stop sends a system message. If "echo" actor hasn't been scheduled to run yet,
         * then the Stop command might get processed first since system messages have priority.
         */
    }

Because system messages jump the line, there is no guarantee that this actor will ever process the "hit" user message before it is stopped. It depends on the whims of the ThreadPool and how long it takes this actor to get activated, which is why the test is racy.

There are various ways to rewrite this test to function correctly without any raciness, but the easiest way to do this is to re-arrange the assertions:

    public void CorrectSystemMessagingOrderingSpec()
    {
        // arrange
        var myActor = Sys.ActorOf(act => act.ReceiveAny((o, context) =>
        {
            context.Sender.Tell(o);
        }), "echo");

        // act
        Watch(myActor); // deathwatch
        myActor.Tell("hit");

        // assert
        ExpectMsg("hit");
        Sys.Stop(myActor); // terminate after asserting processing
        ExpectTerminated(myActor);
    }

In the case of Context.Watch and ExpectTerminated, there's a second way we can rewrite this test which doesn't require us to alter the fundamental structure of the original buggy test:

    public void PoisonPillSystemMessagingOrderingSpec()
    {
        // arrange
        var myActor = Sys.ActorOf(act => act.ReceiveAny((o, context) =>
        {
            context.Sender.Tell(o);
        }), "echo");

        // act
        Watch(myActor); // deathwatch
        myActor.Tell("hit");

        // use PoisonPill to shut down actor instead;
        // eliminates raciness as it passes through /user
        // queue instead of /system queue.
        myActor.Tell(PoisonPill.Instance);

        // assert
        ExpectMsg("hit");
        ExpectTerminated(myActor); // works as expected
    }

The bottom line in this case is that specs can be racy because system messages don't follow the same ordering guarantees as the user messages that make up the other 99.99999% of traffic. This particular issue is most likely to occur when you're writing specs that look for Terminated messages or ones that test supervision strategies, both of which necessitate system messages behind the scenes.

Timed Assertions

Time-delimited assertions are the biggest source of racy unit tests generally, not just inside the Akka.NET project. These types of issues tend to come up most often inside our Akka.Streams.Tests project with tests that look like this:

    public void TooTightTimingSpec()
    {
        Task<IImmutableList<IEnumerable<int>>> t = Source.From(Enumerable.Range(1, 10))
            .GroupedWithin(1, TimeSpan.FromDays(1))
            .Throttle(1, TimeSpan.FromMilliseconds(110), 0, Akka.Streams.ThrottleMode.Shaping)
            .RunWith(Sink.Seq<IEnumerable<int>>(), Sys.Materializer());

        t.Wait(TimeSpan.FromSeconds(3)).Should().BeTrue();
        t.Result.Should().BeEquivalentTo(Enumerable.Range(1, 10).Select(i => new List<int> {i}));
    }

This spec is a real test from Akka.Streams.Tests at the time this document was written. It is designed to test the backpressure mechanics of the GroupedWithin stage, hence the usage of the Throttle flow. Unfortunately this stage depends on the scheduler running behind the scenes at a fixed interval and sometimes, especially on a busy Azure DevOps agent, that scheduler will not be able to hit its intervals precisely. As a result, this test will fail periodically.

There are a few ways we can fix this spec:

- Use await instead of Task.Wait - generally we should be doing this everywhere when possible;

- Relax the timing constraints either by increasing the wait period or by wrapping the assertion block inside an AwaitAssert; or

- Use the TestScheduler and manually advance the clock. That might cause other problems but it takes the non-determinism of the busyness of the CPU out of the picture.
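
The first two options can be sketched roughly as follows. This is only an illustration under stated assumptions, not the fix that was applied in Akka.Streams.Tests: the class name RelaxedTimingSpecs and the 10 second timeout are hypothetical, and Task.WaitAsync requires .NET 6 or later.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.Immutable;
using System.Linq;
using System.Threading.Tasks;
using Akka.Streams;
using Akka.Streams.Dsl;
using FluentAssertions;
using Xunit;

// Hypothetical spec class; Akka.TestKit.Xunit2.TestKit supplies the Sys property.
public class RelaxedTimingSpecs : Akka.TestKit.Xunit2.TestKit
{
    [Fact]
    public async Task RelaxedTimingSpec()
    {
        Task<IImmutableList<IEnumerable<int>>> t = Source.From(Enumerable.Range(1, 10))
            .GroupedWithin(1, TimeSpan.FromDays(1))
            .Throttle(1, TimeSpan.FromMilliseconds(110), 0, ThrottleMode.Shaping)
            .RunWith(Sink.Seq<IEnumerable<int>>(), Sys.Materializer());

        // Await instead of blocking a thread with Task.Wait.
        // The timeout is an assumed, deliberately generous upper bound:
        // the stream needs roughly one second, so a slow scheduler has room to catch up.
        // WaitAsync throws a TimeoutException if the stream has not completed by then.
        IImmutableList<IEnumerable<int>> result = await t.WaitAsync(TimeSpan.FromSeconds(10));

        result.Should().BeEquivalentTo(Enumerable.Range(1, 10).Select(i => new List<int> { i }));
    }
}
```

The generous timeout only affects how long a genuinely broken test takes to fail; a passing run still finishes as soon as the stream completes.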

Testing For Racy Unit Tests Locally

A racy test under Azure DevOps might run fine locally, so we may need to run the test repeatedly until it fails. Here are some techniques you can use to force a test to fail.

Running Tests Under Very Limited Computing Resources

Azure DevOps virtual machines run under a very tight computing resource budget. It is sometimes necessary for us to emulate that locally to see whether our code changes still work under very limited resources. The easiest way to do this is to leverage the Windows 10 WSL 2 Linux virtual machine feature.

- Install WSL 2 by following these instructions

- Create a .wslconfig file in C:\Users\[User Name]\

- Copy and paste this configuration:

    [wsl2]
    # Limits VM memory in WSL 2 up to 2GB
    memory=2GB
    # Makes the WSL 2 VM use two virtual processors
    processors=2

Rebooting wsl With Updated Settings

Once you've made your changes to .wslconfig you'll need to reboot your instance for them to take effect.

You can list all of your wsl distributions via wsl -l or wsl --list:

    Windows Subsystem for Linux Distributions:
    Ubuntu (Default)
    docker-desktop-data
    docker-desktop

In this case we need to terminate our default Ubuntu wsl instance:

    wsl --terminate Ubuntu

The next time we try to launch wsl our .wslconfig settings will be active inside the environment.

Repeating a Test Until It Fails

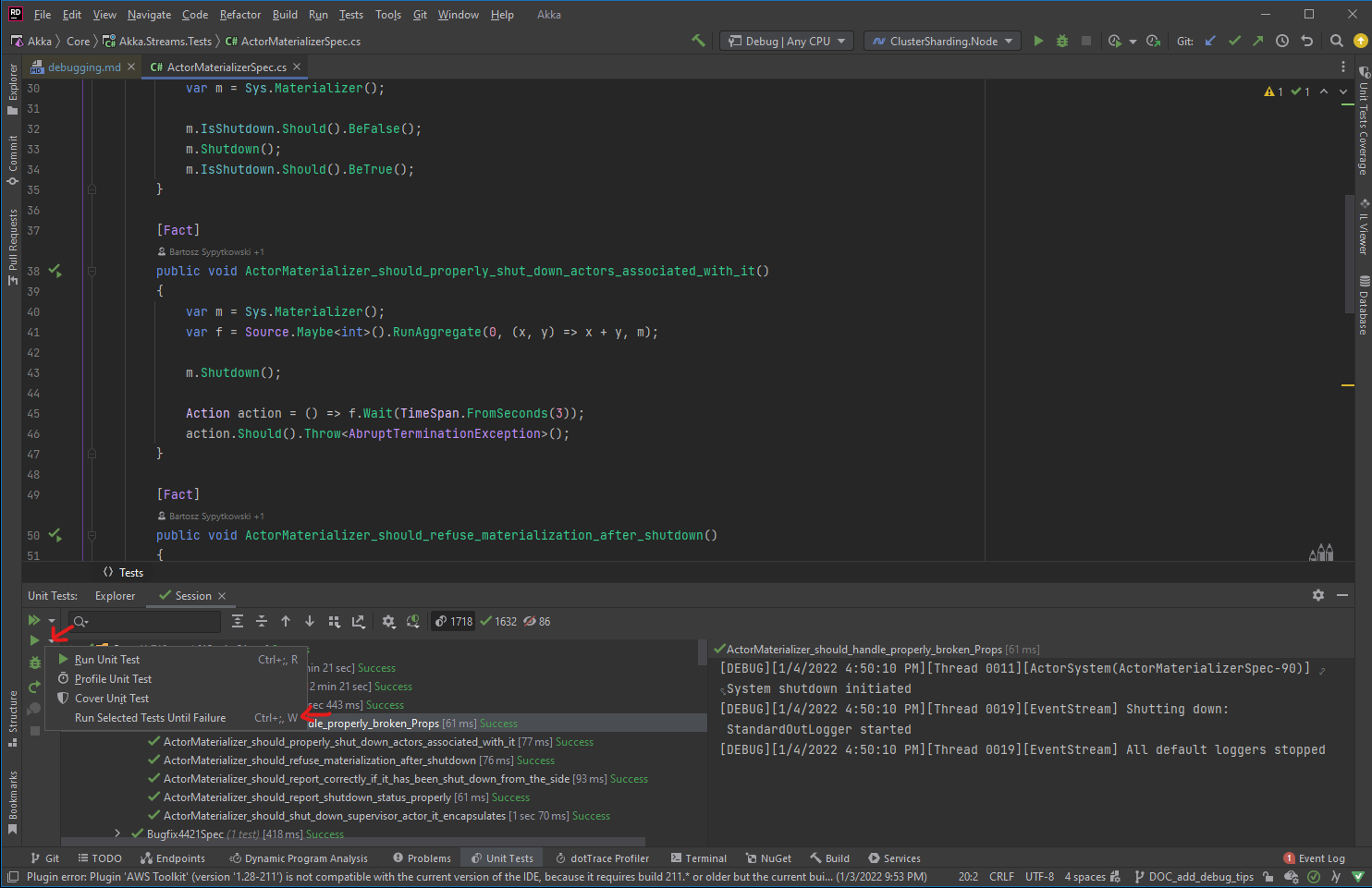

If you're using JetBrains Rider, you can use its unit test feature to run a test until it fails.

On the Unit Tests tab, click on the drop-down arrow right beside the play button and click on the Run Selected Tests Until Failure option.

Another option is to leverage the Xunit TheoryAttribute to run a test multiple times. We provide a convenience RepeatAttribute to do this.

    using Akka.Tests.Shared.Internals;

    [Theory]
    [Repeat(100)]
    public void RepeatedTest(int _)
    {
        ...
    }
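
To show why the repeated test takes an unused int parameter, here is a minimal sketch of how such an attribute could be built on xunit 2.x's DataAttribute. It is a hypothetical re-implementation for illustration only (named RepeatSketchAttribute to avoid confusion); the real RepeatAttribute in Akka.Tests.Shared.Internals may differ.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;
using Xunit.Sdk;

// Hypothetical sketch, not the attribute shipped in Akka.Tests.Shared.Internals.
public sealed class RepeatSketchAttribute : DataAttribute
{
    private readonly int _count;

    public RepeatSketchAttribute(int count)
    {
        if (count < 1)
            throw new ArgumentOutOfRangeException(nameof(count), "Repeat count must be at least 1.");

        _count = count;
    }

    // xunit asks a DataAttribute for the data rows of a [Theory];
    // each row becomes one test case, so returning _count rows
    // runs the test method _count times.
    public override IEnumerable<object[]> GetData(MethodInfo testMethod)
    {
        // The single int in each row is the iteration number (1.._count).
        // It binds to the test's "int _" parameter and is otherwise ignored.
        return Enumerable.Range(1, _count).Select(i => new object[] { i });
    }
}
```

With an attribute like this, each iteration shows up as a separate theory case in the test runner, which makes it easy to see how many of the runs failed.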
